For students of music theory and audio engineering, the learning curve has always been steep. Hearing how a Mixolydian mode differs from a Dorian mode (a major third versus a minor third, with both sharing a flattened seventh) usually requires years of ear training and instrumental proficiency. However, the rise of the AI Song Generator has inadvertently created a new pedagogical sandbox. By treating composition as an input-and-response experiment, users can now reverse-engineer the emotional mechanics of sound. Instead of learning theory to create music, they can generate music to observe theory in action, testing how specific adjectives and structural requests alter the harmonic “DNA” of a track without needing to play a single note.

Deconstructing The Relationship Between Semantics And Harmonics

The core fascination with this technology lies in its semantic processing. When a user inputs a prompt, the system acts as a translator between human language and musical mathematics. This feedback loop offers unique educational value. If a user types “anxious” versus “urgent,” the AI tends to make distinct choices regarding tempo (BPM) and instrumentation. “Anxious” might trigger dissonant string swells and irregular rhythms, while “urgent” might result in a driving, four-on-the-floor percussion pattern.

Treating The Prompt Box As A Variable Control Environment

In a traditional studio, changing a song from “Jazz” to “Lo-Fi” involves re-recording instruments and changing the mix entirely. In this generative environment, it is merely a variable change. This allows for A/B testing of musical concepts. A user can keep the lyrical content identical but swap the genre tag, instantly hearing how the prosody of the lyrics adapts to a swing rhythm versus a quantized trap beat. This rapid switching exposes the structural skeletons of different genres, making the defining characteristics of each style transparent to the listener.
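The A/B idea above can be sketched as a small prompt matrix. This is only an illustration: the lyric line, the field names, and the prompt wording are all hypothetical, and a real generator may expect a different input format. The point is that the lyrics stay fixed while one controlled field changes at a time.

```python
from itertools import product

# Hypothetical inputs; none of these values come from a specific tool.
LYRICS = "City lights are fading, but I'm still awake"
GENRES = ["Jazz", "Lo-Fi", "Trap"]
MOODS = ["anxious", "urgent"]


def build_prompts(lyrics, genres, moods):
    """Return one prompt per (genre, mood) pair, keeping the lyrics fixed."""
    prompts = []
    for genre, mood in product(genres, moods):
        prompts.append({
            "genre": genre,
            "mood": mood,
            "prompt": f"{mood} {genre} track. Lyrics: {lyrics}",
        })
    return prompts


def differing_fields(a, b):
    """List the controlled fields that differ between two prompt variants."""
    return [key for key in ("genre", "mood") if a[key] != b[key]]


if __name__ == "__main__":
    for variant in build_prompts(LYRICS, GENRES, MOODS):
        print(variant["prompt"])
```

Comparing only pairs for which `differing_fields` returns a single entry keeps each listening test to one changed variable, so any difference in the result can be attributed to that change.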

Analyzing The Role Of Neural Networks In Genre Definition

The underlying technology does not “know” music in the human sense; it recognizes patterns. When it generates a “Blues” track, it is essentially following learned probabilities that favor the 12-bar structure and the flattened third and seventh notes. Observing these outputs reveals what the collective dataset considers the “quintessential” elements of a genre. This makes it easier to tell a genre's defining clichés from its optional nuances, and it holds up a mirror to our own cultural definitions of musical style.
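For readers who want to check a generated track against the theory, the two blues features named above can be written out explicitly. The sketch below uses one common textbook form of the 12-bar progression (other variants exist) and flat spellings for all note names; the function names are illustrative, and nothing here describes how any generator works internally.

```python
# Chromatic scale with flat spellings; the tonic must be one of these names.
NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]

# One common textbook 12-bar blues form, one chord per bar.
TWELVE_BAR = ["I", "I", "I", "I", "IV", "IV", "I", "I", "V", "IV", "I", "I"]

# Semitone offsets of each chord root above the tonic.
DEGREE_OFFSETS = {"I": 0, "IV": 5, "V": 7}

# Flattened third and flattened seventh, in semitones above the tonic.
BLUE_NOTE_OFFSETS = {"b3": 3, "b7": 10}


def transpose(tonic, semitones):
    """Return the note name a given number of semitones above the tonic."""
    index = NOTE_NAMES.index(tonic)
    return NOTE_NAMES[(index + semitones) % 12]


def twelve_bar_in(tonic):
    """Chord roots for each of the 12 bars in the given key."""
    return [transpose(tonic, DEGREE_OFFSETS[degree]) for degree in TWELVE_BAR]


def blue_notes_in(tonic):
    """The flattened third and seventh for the given key."""
    return {name: transpose(tonic, offset)
            for name, offset in BLUE_NOTE_OFFSETS.items()}


if __name__ == "__main__":
    print(twelve_bar_in("A"))
    print(blue_notes_in("A"))
```

With the roots and blue notes for a key in hand, a listener can follow a generated “Blues” track bar by bar and note where it matches or departs from the convention.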

Implementing A Scientific Approach To Audio Generation

To utilize this tool effectively as a mechanism for understanding sound, one must adopt a systematic approach. The process mimics a scientific experiment: hypothesis (prompt), execution (generation), and analysis (listening).

Phase 1: Formulating The Sonic Hypothesis Via Description

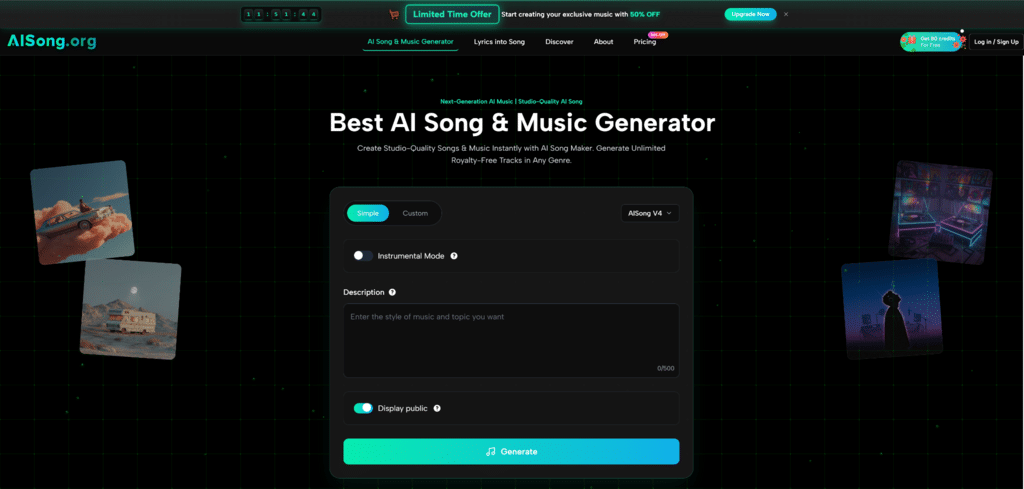

The first step is establishing the control variables. The user inputs the prompt, which acts as the hypothesis. For example, “A classical piece utilizing minor arpeggios to convey sorrow.” This description sets the constraints for the AI, defining the boundaries of the expected output.

Phase 2: Executing The Generation Protocol

Upon activation, the AI processes these constraints. It draws on patterns learned from its training data to synthesize audio that aligns with the semantic hypothesis. This is not a retrieval of existing audio but a computational construction of new waveforms that fit the description.

Phase 3: Extracting The Data For Analysis

The final step is the download. The user retrieves the MP3 file, which serves as the data point. By analyzing this file—perhaps even loading it into a spectrum analyzer—the user can see how the AI interpreted “sorrow” in terms of frequency balance and chord voicing.
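As a rough illustration of what “frequency balance” means in numbers, the sketch below measures how much spectral energy falls below a chosen split frequency. It is a simplification under stated assumptions: decoding an MP3 requires a third-party decoder that is not shown, so a synthetic mix of two sine waves stands in for the decoded samples, and the sample rate, split point, and function names are illustrative. The naive DFT is only practical for short clips.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz; assumed value for this synthetic example


def sine_mix(freqs_and_amps, n_samples, sample_rate=SAMPLE_RATE):
    """Synthesize a sum of sine waves as a stand-in for decoded audio."""
    return [
        sum(amp * math.sin(2 * math.pi * freq * n / sample_rate)
            for freq, amp in freqs_and_amps)
        for n in range(n_samples)
    ]


def magnitude_spectrum(samples):
    """Naive O(N^2) DFT; returns magnitudes for bins 0 through N/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        mags.append(abs(acc))
    return mags


def low_band_share(samples, split_hz, sample_rate=SAMPLE_RATE):
    """Fraction of spectral energy in bins below split_hz."""
    energies = [m * m for m in magnitude_spectrum(samples)]
    bin_hz = sample_rate / len(samples)
    low = sum(e for k, e in enumerate(energies) if k * bin_hz < split_hz)
    total = sum(energies)
    return low / total if total else 0.0


def dominant_frequency(samples, sample_rate=SAMPLE_RATE):
    """Center frequency of the strongest DFT bin."""
    mags = magnitude_spectrum(samples)
    peak_bin = max(range(len(mags)), key=mags.__getitem__)
    return peak_bin * sample_rate / len(samples)


if __name__ == "__main__":
    # A "dark" mix: strong 220 Hz tone, weaker 1760 Hz tone.
    dark = sine_mix([(220, 1.0), (1760, 0.5)], n_samples=400)
    print(low_band_share(dark, split_hz=1000))
    print(dominant_frequency(dark))
```

Running the same measurement on clips generated from two different mood words gives a simple numeric comparison to set beside what the ear reports.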

Contrasting Theoretical Study With Empirical Generation

The following table illustrates the shift from abstract theory to tangible experimentation.

| Educational Concept | Traditional Music Theory | AI Music Generator |
|---|---|---|
| Learning Method | Deductive (Rules first) | Inductive (Results first) |
| Feedback Loop | Delayed (Requires practice) | Immediate (Instant playback) |
| Resource Cost | High (Tutors, Instruments) | Low (Browser-based) |
| Experimentation | Difficult (Requires skill) | Unlimited (Requires prompts) |
| Genre Accessibility | Limited to player’s skill | Universal access |

Bridging The Gap Between Technical Vocabulary And Audio Output

One of the most significant barriers for non-musicians is the vocabulary gap. They know what they feel but not the technical term for it. This platform acts as a Rosetta Stone. A user might describe a sound as “echoey and distant,” and the AI generates a track heavy with reverb and high-pass filters. Over time, the user learns to associate their descriptive language with specific audio effects, effectively learning the terminology of production through trial and error.

Leveraging AI For Genre Exploration And Cross-Pollination

The most potent educational outcome is the ability to mash up conflicting genres. Asking the AI for “Country Techno” or “Baroque Rap” forces the system to find commonalities between disparate styles. The results, while sometimes chaotic, often highlight the universal structures that underpin all music—rhythm, melody, and harmony—regardless of the aesthetic packaging.