Introduction

In 2025, AI acts as a master researcher, navigating giant datasets to respond with pinpoint accuracy. Retrieval-augmented generation (RAG) and large language models (LLMs) are the driving forces, accelerating industries from technology to health care. The AI market is reaching $126 billion, with 65% of companies using RAG-powered systems, compared with 2023 figures, according to industry reports. From coding platforms to research products, these technologies bring intelligence to everyday workflows, often through smooth, user-friendly interfaces. This is the story of how RAG and LLMs are changing 2025.

How RAG and LLMs Evolved

AI used to struggle with context, which led to wrong answers. Early language models like BERT were a strong start, but RAG raises the bar by combining models like GPT-4 with real-time data retrieval from documents or databases. This helps ensure that answers are correct and take context into account. LLMs work out what people mean by “bank,” which can be either a financial institution or a riverbank. The open-source MiniMax M1, which has a 1M-token context window, can process whole datasets such as corporate manuals at once, and has been called a game-changer on X. This leap is driving demand for skilled developers to build these systems. Companies that want to use this technology can hire Retrieval-Augmented Generation (RAG) developers through Upstaff to build tools that are accurate and grounded in data. For industries that require accuracy, RAG and LLMs are now essential.
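To make the retrieval step concrete, here is a minimal sketch of the idea in plain Python. It is an illustration under stated assumptions, not how any product named in this article works: the three documents, the stopword list, and the function names (`retrieve`, `build_prompt`) are all hypothetical, and simple word-count cosine similarity stands in for the embedding models and vector databases a production system would likely use. The final prompt would then be passed to an LLM of your choice.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base standing in for company documents.
DOCUMENTS = [
    "The bank approved the loan and opened a savings account for the customer.",
    "We walked along the river bank and watched the water flow past the trees.",
    "The support manual explains how to reset a password from the login page.",
]

# Small illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "and", "for", "from", "to", "how", "do", "i", "we", "can", "is", "of"}


def tokenize(text):
    """Lowercase the text, split it into words, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, documents, top_k=1):
    """Combine retrieved context and the question into one prompt for an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("Can I fish from the river bank?", DOCUMENTS))
```

Because the retrieved passage is about a river rather than a loan, the model receives the context it needs to read “bank” in the intended sense, which is the point of grounding answers in retrieved data.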

Real-World Applications

RAG and LLMs are ubiquitous in 2025, powering tools across industries with remarkable versatility. Here is how they are reshaping workflows in both iconic and unexpected projects, often paired with responsive, seamless user interfaces.

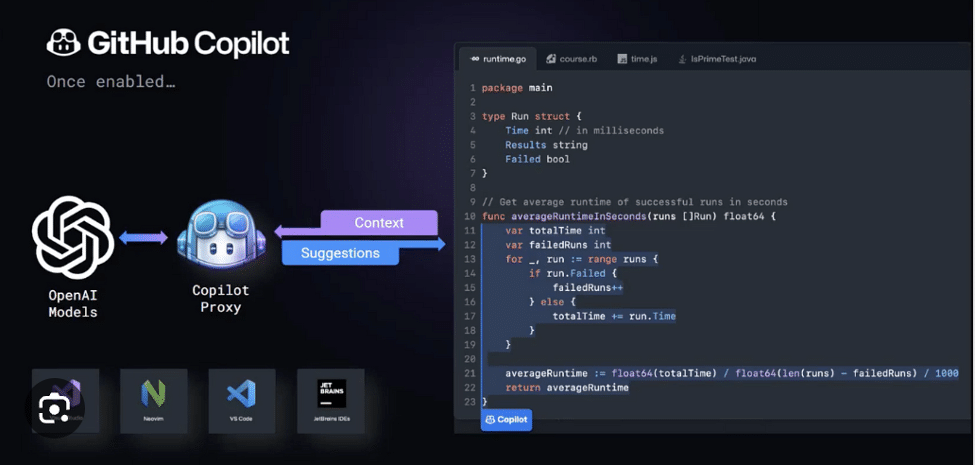

● GitHub Copilot’s Code Suggestions

GitHub Copilot pairs an LLM with RAG to provide code suggestions based on a project’s context: relevant parts of the repository are retrieved quickly and analyzed by the LLM. The interface, built with React and modern JavaScript, delivers on-the-spot, project-specific suggestions so that applications can be developed faster, yielding a 20% productivity gain, according to GitHub’s 2025 documentation.

● Notion’s Smart Note Search

Notion’s AI search uses LLMs to surface relevant snippets, while RAG pulls results from notes scattered across a workspace. Its React frontend, styled with Tailwind CSS, returns results instantly, potentially saving users hours of searching.

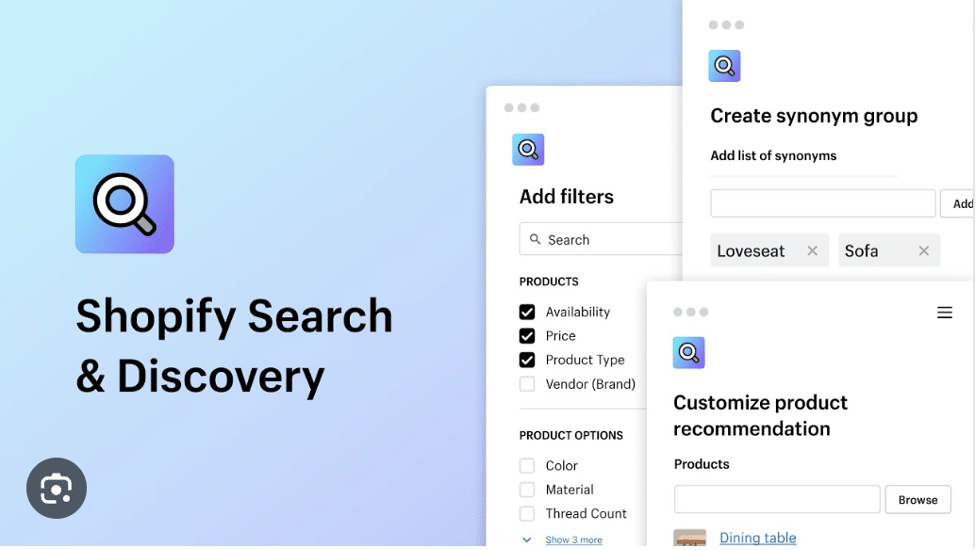

● Shopify’s Product Discovery

Shopify utilizes RAG together with LLMs to map customer searches like “cozy winter jacket” to products, yielding a 15% sales increase in 2025, according to Forbes. Its React-enabled storefront is designed to ensure the search results load quickly to create better shopping experiences.

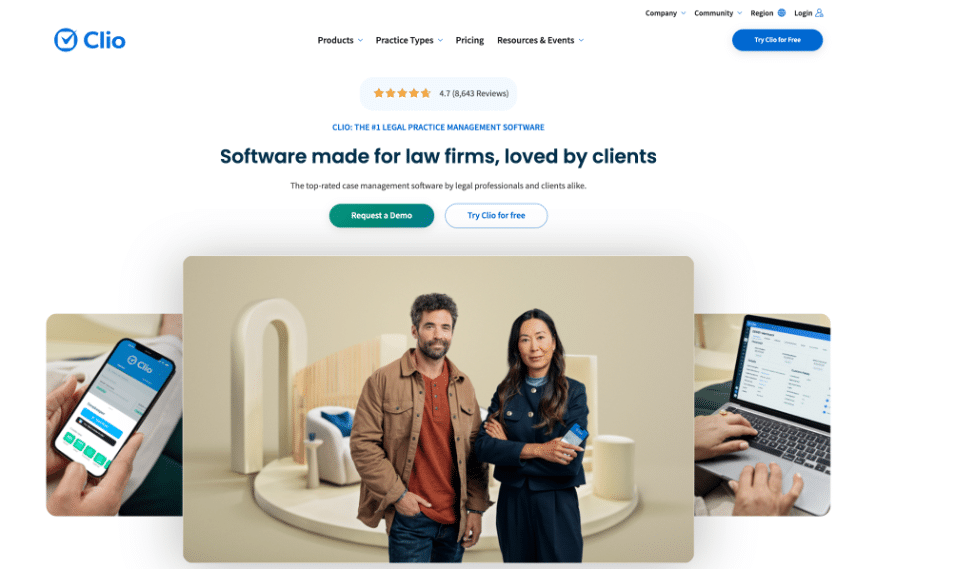

● Clio’s Legal Research

Clio uses RAG to search case files for precedents and make lawyers’ workflows more efficient. By presenting results cleanly, Clio’s React interface reduces research time for lawyers and law firm teams.

● Grammarly’s Tone-Aware Writing

In 2025, Grammarly introduced RAG-backed LLM suggestions that propose small changes to writing based on the tone of the document. In its React-based editor, users see the suggestions update live, which improves clarity and builds user trust.

● Zendesk’s Support Efficiency

With the help of LLMs and RAG, Zendesk has increased the speed of handling support tickets by 30%, sourcing answers from their knowledge bases. Their React-based dashboard keeps agents engaged and productive, fundamentally changing the way customer service operates.

● Slack’s Message Retrieval

Slack’s AI assistant employs RAG with LLMs, enabling it to retrieve relevant messages or files from team channels for requests like “let me see last week’s project update.” Its React Native interface gives results in-thread, improving collaboration.

● Jira’s Bug Tracking

Jira employs RAG with LLMs for issue tracking, drawing context from tickets and wikis. Through its React interface, related issues surface immediately, allowing developers to resolve bugs faster.

● Bloomberg Terminal’s Market Insights

Bloomberg Terminal uses RAG with Elasticsearch to provide real-time market insights, parsing reports and news to help traders. Users get interactive data visualizations on the React frontend. In 2025, user engagement grew by 25%, according to firsthand reports from industry experts.

● Epic Systems’ Patient Care

Epic Systems leverages RAG with LLMs to access patient records and guidelines for doctors. Its React-based electronic health record (EHR) interface enables clinicians to obtain critical insights in seconds and improve care accuracy.

● Perplexity’s Answer Engine

Perplexity is anticipated to be valued at $14 billion in 2025. It uses RAG in its conversational search engine, which draws near-real-time information from news and other sources, according to Gulf News. Through its React frontend, Perplexity provides cited answers, setting itself apart from Google Search by being transparent about where its results come from.

● HubSpot’s CRM Search

HubSpot introduced a deep research connector with ChatGPT in June 2025, leveraging RAG to search CRM objects like contacts and deals, per X posts. A React-based dashboard displays the contextual results, improving sales intelligence.

● Colgate-Palmolive’s Consumer Insights

Colgate-Palmolive uses RAG to search its archive of consumer research, drawing insights from thousands of documents, according to The X Change. Its React interface visualizes trends to support better product decisions in 2025.

● Medical LLM Research

Medical research is tapping into RAG for diagnostics, as highlighted by a 2025 study in Scientific Reports that used LLMs to retrieve clinical guidelines. React-based dashboards visualize the results, helping doctors make decisions in real time.

The range of applications, from coding to consumer goods, demonstrates the utility of RAG and LLMs. In many instances, their capabilities are further enhanced by dynamic interfaces built with React. Tools like LangChain, Pinecone, and ChromaDB help make AI more precise, bringing it to the domains that require that precision.

Future Trends and Challenges

By 2026, multimodal RAG will combine text, images, and audio, with models such as Google’s Gato examining product photographs alongside product descriptions. Advances in long-context models, such as MiniMax M1, are set to revolutionize fields like legal tech. Privacy is still a challenge: in 2025, 60% of companies prioritize ethical AI (as reported). Evaluation toolkits like Ragas help check that RAG outputs are accurate and fair, which supports public trust in these systems.

The Need for Talent

Job postings for RAG and LLM developers have surged: in Q1 2025, LinkedIn saw a 50% increase compared to Q4 2023. Companies are looking for developers who know LLMs, LangChain, and React to build RAG tools like Perplexity’s search or HubSpot’s CRM connector. Upstaff connects both sides, whether you are a company looking to hire RAG developers for next-generation AI systems or a RAG developer looking for projects at careers/upstaff.com.

Conclusion

RAG and LLMs are igniting AI’s explosive growth in 2025, from GitHub’s coding helpers to Perplexity’s answer engine and beyond. With LLMs, LangChain, PyTorch, Hugging Face, and cloud platforms (AWS, Azure, and GCP), companies are rapidly building intelligent systems.

Whether you need to hire an LLM engineer for semantic data pipelines, real-time inference, or autonomous agent development, or you need help with AI projects, Upstaff.com is definitely a partner to consider for AI, Data, and Web3 solutions.