The digital age has provided an avenue for sharing thoughts and feelings. However, keeping this avenue safe and inclusive is challenging because of the sheer volume of content users share online. As a result, the need for content moderation services has become more critical than ever.

The technological advancements brought about by the digital age continue to revolutionize how people live, work, and communicate. More people are joining the online space.

According to Meltwater’s 2024 Global Digital Report, around 5.35 billion people use the internet, or about 66.2% of the global population. The number of internet users has increased by 1.8% compared to the previous year, which represents 97 million new online users.

People also spent more time online in 2023. Users averaged 6 hours and 40 minutes on the internet daily, up by 1% from the average of 6 hours and 36 minutes the previous year.
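The figures above can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the variable names are hypothetical, and the inputs are the rounded numbers quoted from the report, so the results are approximate.

```python
# Rounded figures quoted above from the 2024 report.
internet_users = 5.35e9          # people using the internet
share_of_population = 0.662      # 66.2% of the global population
new_users = 97e6                 # users added over the previous year

# Implied global population and year-over-year growth in users.
implied_population = internet_users / share_of_population
previous_users = internet_users - new_users
user_growth_pct = new_users / previous_users * 100

# Average daily time online, converted to minutes.
minutes_now = 6 * 60 + 40        # 6 hours 40 minutes
minutes_before = 6 * 60 + 36     # 6 hours 36 minutes
time_growth_pct = (minutes_now - minutes_before) / minutes_before * 100

print(f"Implied global population: {implied_population / 1e9:.2f} billion")
print(f"User growth: {user_growth_pct:.1f}%")
print(f"Growth in daily time online: {time_growth_pct:.1f}%")
```

Under these rounded inputs, the computed growth rates agree with the roughly 1.8% and 1% increases cited above.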

Imagine the sheer amount of user-generated content (UGC) produced by billions of people spending over 6 hours online daily. While most UGC benefits users and businesses, some may threaten user safety and brand reputation.

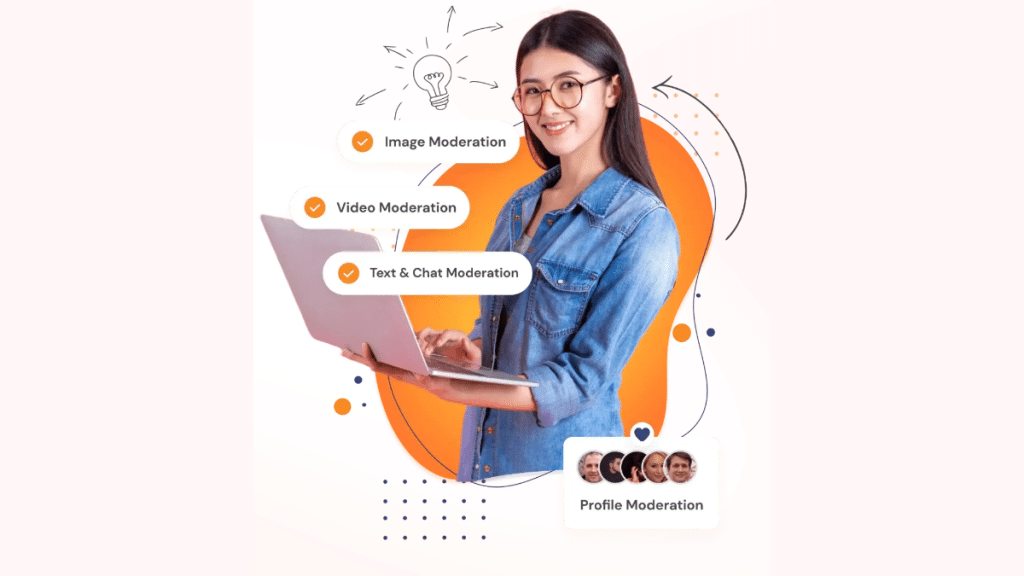

Proper implementation of content moderation prevents the spread of inappropriate content and mitigates the risks associated with offensive and harmful UGC.

Social Networking in the Digital Age

The digital age resulted in the sudden rise of social networking platforms. Social media websites have become an integral part of people’s lives. Users can easily connect with others, share their content, and express themselves globally.

Take a look at the following statistics:

● As of 2024, the total number of social media user identities reached 5.04 billion.

● Social media users spend an average of 2 hours and 23 minutes daily on various platforms.

● The most used apps or online platforms in 2024 are messaging apps and social networks.

● Around 94.7% of all internet users aged 16 to 64 reported using chat and messaging apps. Not far behind is social networking. About 94.3% of the same cohort reported using the Internet for social networking.

The combination of humans’ innate desire to socialize and the interconnectedness offered by social media platforms blurs the line between personal and public spaces. It paves the way for the creation of virtual communities that transcend geographical boundaries.

However, this interconnectivity comes with various challenges related to the increase in UGC. It also heightens potential issues like privacy breaches, misinformation, and online harassment. Implementing effective social media moderation services can mitigate these UGC-related risks.

The Importance of Content Moderation in the Digital Age

The increasing number of people using the Internet to express themselves necessitates effective content moderation. The sheer number of users and volume of UGC make it harder to maintain a safe and constructive environment in the digital age. Content moderators keep a watchful eye on what users share on the platform.

Websites and online platforms have sets of rules regarding UGC, which are outlined in their community standards and guidelines. It is the role of a content moderator to enforce these rules. Moderators monitor UGC to ensure that no content violating the guidelines spreads on the platform.

Content moderation is also responsible for protecting users from potential risks. The online space is not free from malicious activities such as scams, trolling, and online harassment. Moderators identify and remove UGC that can be harmful or illegal.

The primary source of information in this digital age is the Internet. Users with ill intent may use the Internet to spread inaccurate or misleading content. Content moderation services keep the online space more trustworthy by identifying and addressing false information.

Moreover, effective content moderation helps build brand integrity and reputation. Timely and accurate removal of inappropriate content demonstrates a company’s commitment to maintaining a safe and respectful online space for user engagement. This commitment builds trust among users, making them more likely to participate in discussions.

Content Moderation for Social Media

Social media offers an opportunity for businesses to maximize their reach and increase their online presence without extravagant costs. However, these social networking platforms rely on UGC for their activities. Maintaining a safe and respectful social media environment requires curating vast amounts of UGC and filtering out inappropriate content.

What does a social media content moderator do? The primary goal of content moderation on social media platforms is to maintain a safe, respectful, and inclusive online space for users. Moderators achieve this goal by carefully reviewing content and filtering out anything that violates the community standards and guidelines.

Community guidelines outline acceptable behavior and content within the platform. They may vary across different websites and apps. Most guidelines cover a range of issues, including hate speech, harassment, nudity, violence, profanity, and more. A content moderator for social media enforces these guidelines, ensuring that every user on the platform adheres to them.
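As a rough illustration of what an automated first pass at enforcing such guidelines might look like, the sketch below flags posts containing blocklisted terms and routes them to a human reviewer. Everything in it is an assumption made for illustration: the category names, the placeholder terms, and the `review_post` function are hypothetical and do not describe any particular platform’s system.

```python
import re

# Hypothetical blocklist: guideline category -> placeholder terms.
BLOCKLIST = {
    "profanity": {"badword"},
    "harassment": {"loser", "idiot"},
}

def review_post(text: str) -> list[str]:
    """Return the sorted guideline categories a post may violate."""
    # Lowercase the text and split it into words before matching.
    words = set(re.findall(r"[a-z']+", text.lower()))
    return sorted(cat for cat, terms in BLOCKLIST.items() if words & terms)

posts = ["Great photo, thanks for sharing!", "You are such an idiot."]
for post in posts:
    flags = review_post(post)
    status = f"needs human review ({', '.join(flags)})" if flags else "approved"
    print(f"{post!r}: {status}")
```

Simple keyword matching like this cannot judge context, so in this sketch a flagged post is only queued for a human moderator rather than removed automatically.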

Keeping a Clean Online Environment Through Effective Moderation

The need for effective and efficient content moderation solutions increases as users continue to create UGC. Content moderation goes beyond simply deleting posts. It involves proper enforcement of the community standards and guidelines.

Skillful content moderators can also help prevent the spread of fake news. An online community free from inappropriate content and misinformation enhances user safety and increases brand integrity.